What happens when you are convinced you were scammed by AI but no one believes you? It’s a phenomenon that is happening with increasing regularity.

A Reddit user and mother from Illinois recently fell victim to what she described as an “AI phone scam,” losing thousands of dollars and suffering severe emotional trauma.

She claimed AI was used to clone her daughter’s voice to carry out a treacherous “kidnapping scam” that had her on the phone for hours trying to free her daughter.

Her claim that AI was used, however, kicked off a heated debate, with many people doubting that artificial intelligence was involved.

How The Scam Started – Persistent Calls

Her phone rang once. Then again, and again and again. Each time the call came through she ignored it. But then finally, she decided to answer it.

When she finally answered, she heard what sounded like her daughter crying, repeatedly apologizing about being in an accident.

Then her daughter passed the phone to a man who took over the call. He said her daughter had been taking photos at an accident scene, something the scammer couldn’t allow because he was “doing something illegal.”

He told the mother he had panicked and taken her daughter to another location. It all sounded so real.

And she didn’t hear her daughter’s voice just once. It happened several times.

“This man let me talk to her twice or three times. She even responded to me,” the victim explained. “This man knew where she was, where I was, and apparently a lot more about us.”

They Kept Her On The Phone For Hours

The harrowing scam soon turned to extortion. The scammer kept the mother constantly moving and her hands busy, directing her to drive to various locations while keeping up his threats.

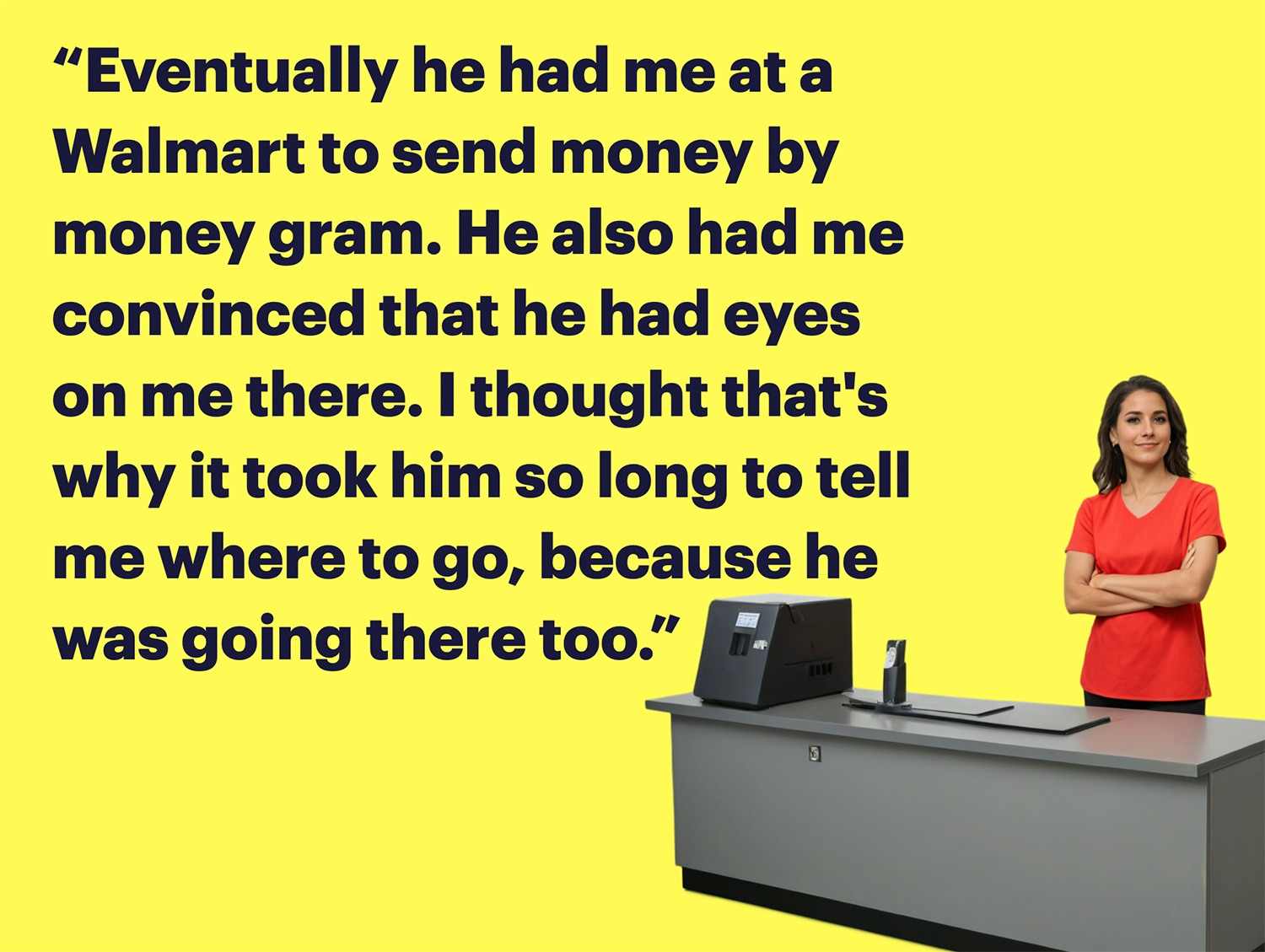

When it came time to collect the ransom, he directed her to a Walmart to send money through MoneyGram, convincing her he had “eyes on her.” The scammer even “let” her take a call from her husband so he “wouldn’t get suspicious,” but forced her to add the scammer to the call.

After she paid, the scam wasn’t even over. The scammer continued to terrorize her.

“Even after I sent the money,” she said, “he had me driving around to ‘pick her up.’ But he kept changing the location.”

This was a stall tactic: the scammer wanted to make sure he had collected the money before letting her go.

She Was Ashamed and Humiliated

After the harrowing journey, the mother was a wreck. She says she ended up sleeping for 24 hours straight because she felt exhausted and ashamed.

“I lost a lot of money I didn’t have to lose. I am humiliated and extremely traumatized,” she said. “This was a few days ago and I think I’ve been in shock because I slept over 24 hours. I am so ashamed.”

She posted her experience on social media to warn others. But it didn’t end there.

Experts Say It Wasn’t AI

You would think the mother would get sympathy. And she did from some. But others were critical of her, and experts said she was mistaken – it wasn’t AI at all.

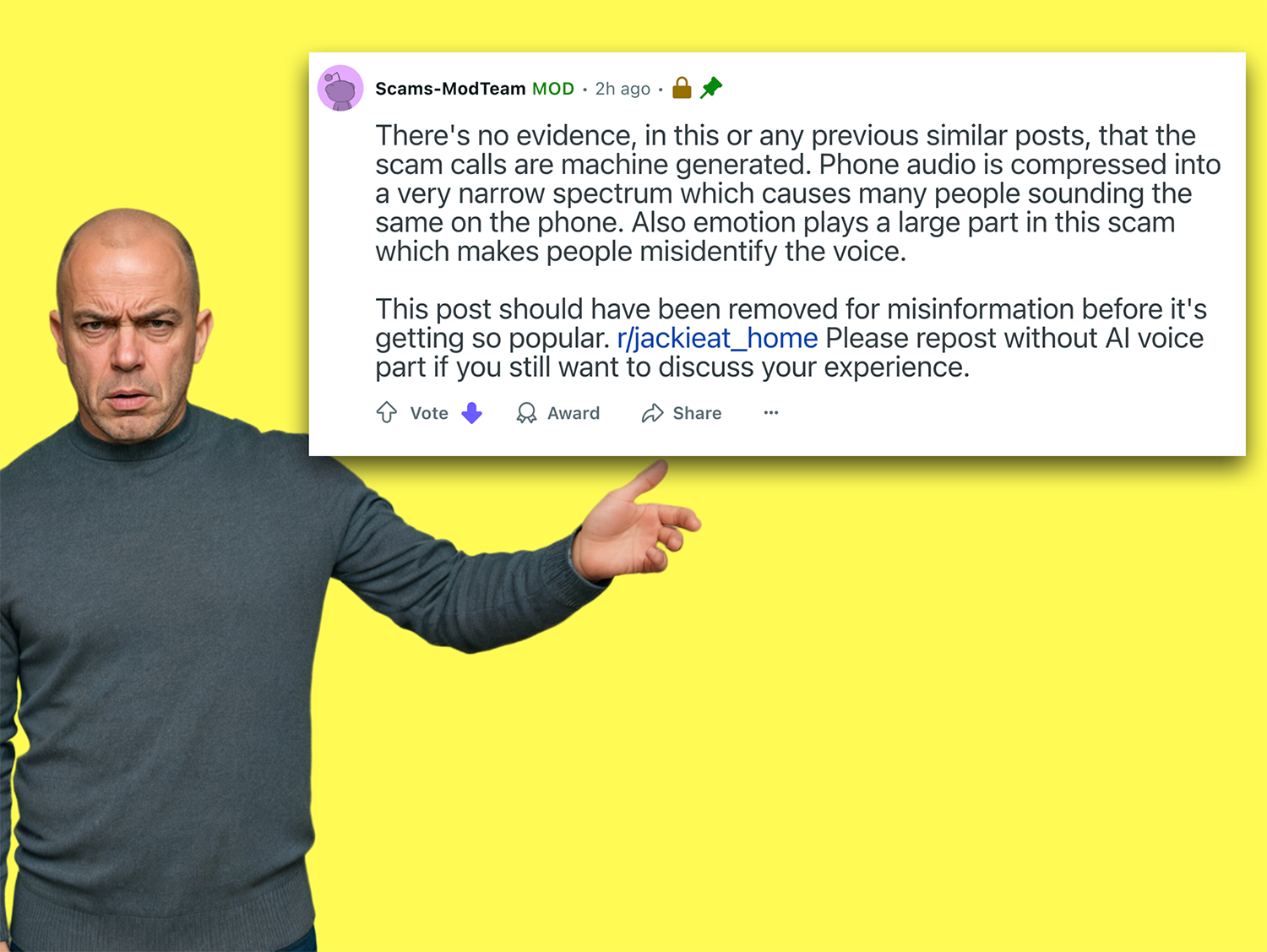

A moderator on the r/Scams subreddit cast doubt on her claim, treating it as disinformation: “There’s no evidence, in this or any previous similar posts, that the scam calls are machine generated,” the moderator wrote.

“Phone audio is compressed into a very narrow spectrum which causes many people sounding the same on the phone. Also emotion plays a large part in this scam which makes people misidentify the voice.”

And the moderator wasn’t the only one who was skeptical.

Other commenters echoed what they said. “AI voices in real time aren’t really that good yet, it’s likely it was just any girl they already have working there,” wrote one user.

Another explained, “These scams are never ‘AI’, it’s simply not technically feasible yet to run this scam with real-time AI and more importantly – it’s not at all necessary.”

Not So Fast – Yes, Real-Time AI Clones Are Real

Despite these claims, some experts argue that real-time or near-real-time AI voice cloning is increasingly possible.

A growing industry of AI voice cloning services has emerged, with companies like ElevenLabs offering “advanced voice cloning with as little as a few seconds of audio”.

Those clones can be used through API services that carry on conversations with the help of ChatGPT and similar models. HeyGen, for example, released “Interactive Avatars” that integrate with ElevenLabs to hold real-time conversations.

“It is technically possible to pull this off,” commented one user claiming to work in fraud prevention. “Banks are reporting deepfakes where the scammer clones a voice on like ElevenLabs, then they can type in the responses and generate the audio clips in semi-real-time.”

It’s important for people to understand that these scams are happening now and that they are very real.

The Aftermath – “I Am Glad It Wasn’t Real”

As for the victim in the scam, she is just thankful the whole thing is over.

Whether AI was involved or not, the psychological impact on the victim has been severe. She reported sleeping for over 24 hours afterward, likely from emotional shock, and continues to struggle to sleep without medication.

“When it turned out she was okay, I didn’t even care about the money,” she wrote. “I was so glad it wasn’t real.”