Lemonade (the insurance startup, not the drink) is under fire for bragging that it uses AI to analyze up to 1,600 data points on insurance claims made via its video app, and then denies those claims if it suspects fraud.

It did not go well. Twitter and other social media erupted when Lemonade boasted about this use of AI to root out fraudulent claims.

The company has rocketed to become one of the fastest-growing insurance companies by using AI and chatbots to process insurance claims in under 3 seconds.

The company, which offers renters, homeowners, pet, and life insurance, appeals to millennials. It has over 1 million customers, and the average customer is 35 years old. But the highly digital experience also appeals to fraudsters, who like the fast and easy claims process.

They Tweeted About Their Unique AI Approach

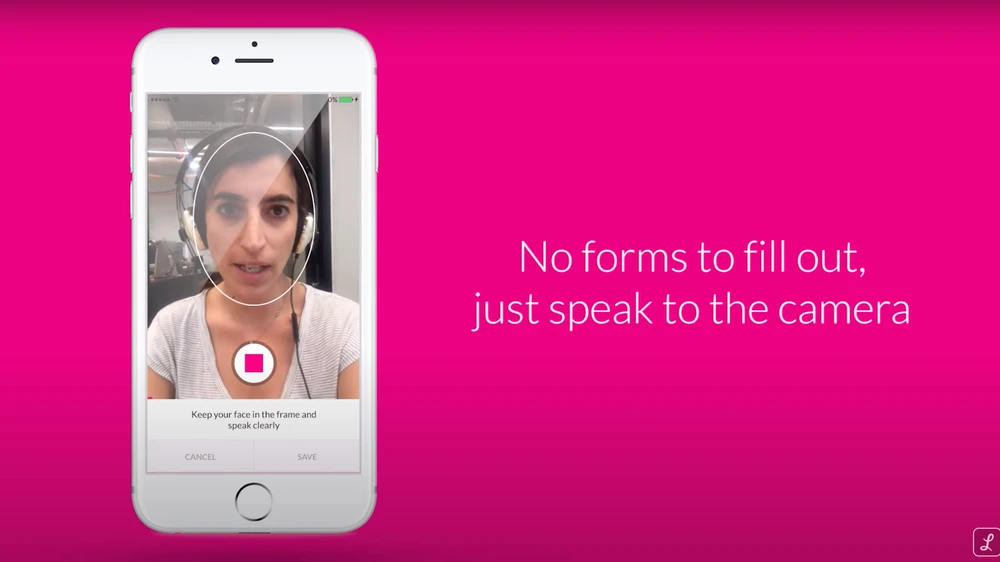

Lemonade is unique in that policyholders can submit claims by sending in a video message describing what happened and what their claim is for. The company says this convenience is something customers love. There are no forms to fill out; you just speak to the camera and submit the claim.

But the company says it also uses that video to detect whether people might be lying in their claims.

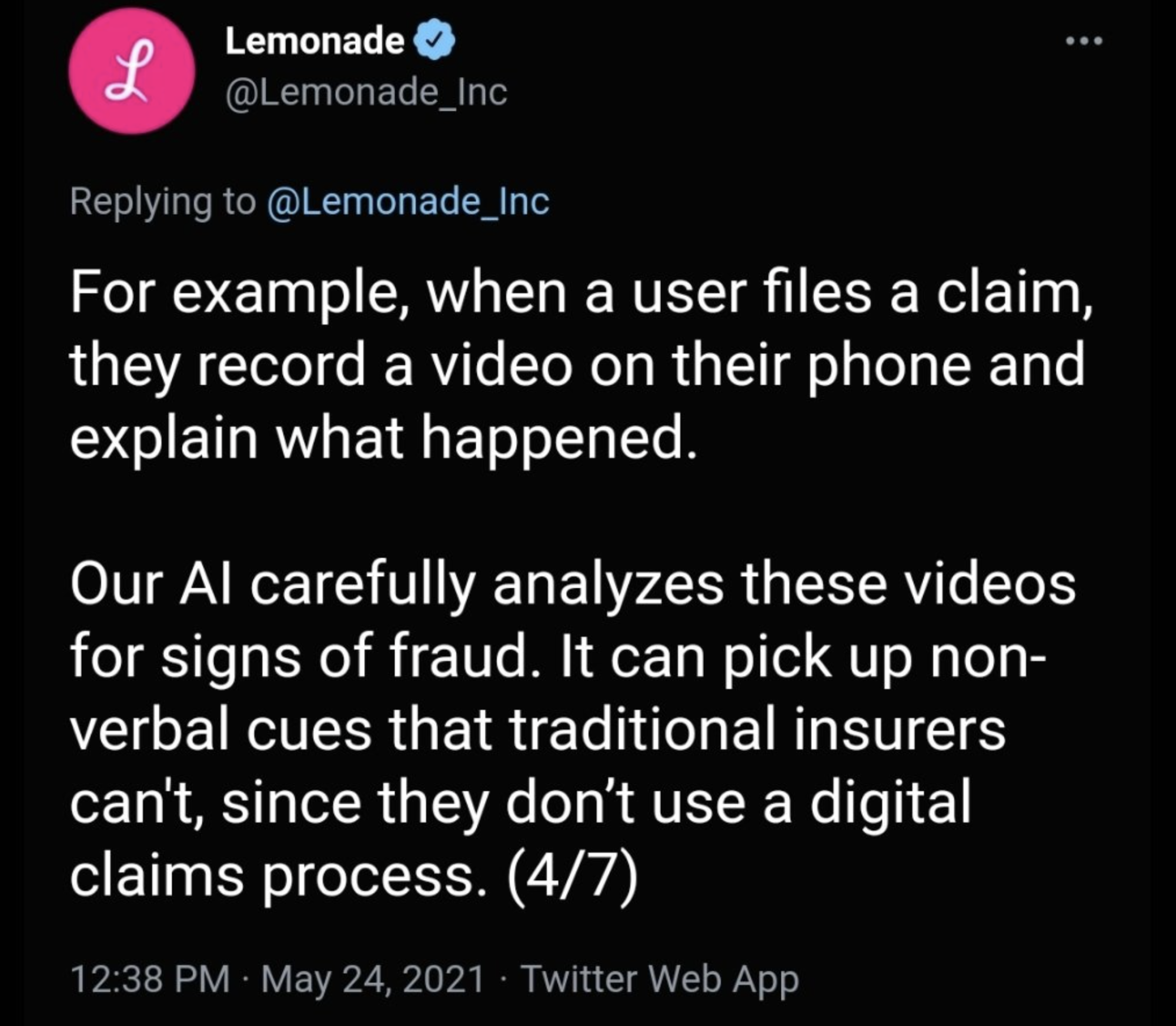

In a Twitter thread on Monday that the company later deleted, Lemonade announced that the customer service AI chatbots it uses collect as many as 1,600 data points from a single video of a customer answering 13 questions.

“Our AI carefully analyzes these videos for signs of fraud. It can pick up non-verbal cues that traditional insurers can’t, since they don’t use a digital claims process,” the company said in a now-deleted tweet. The thread implied that Lemonade was able to detect whether a person was lying in their video and could thus decline insurance claims if its AI believed a person was lying.

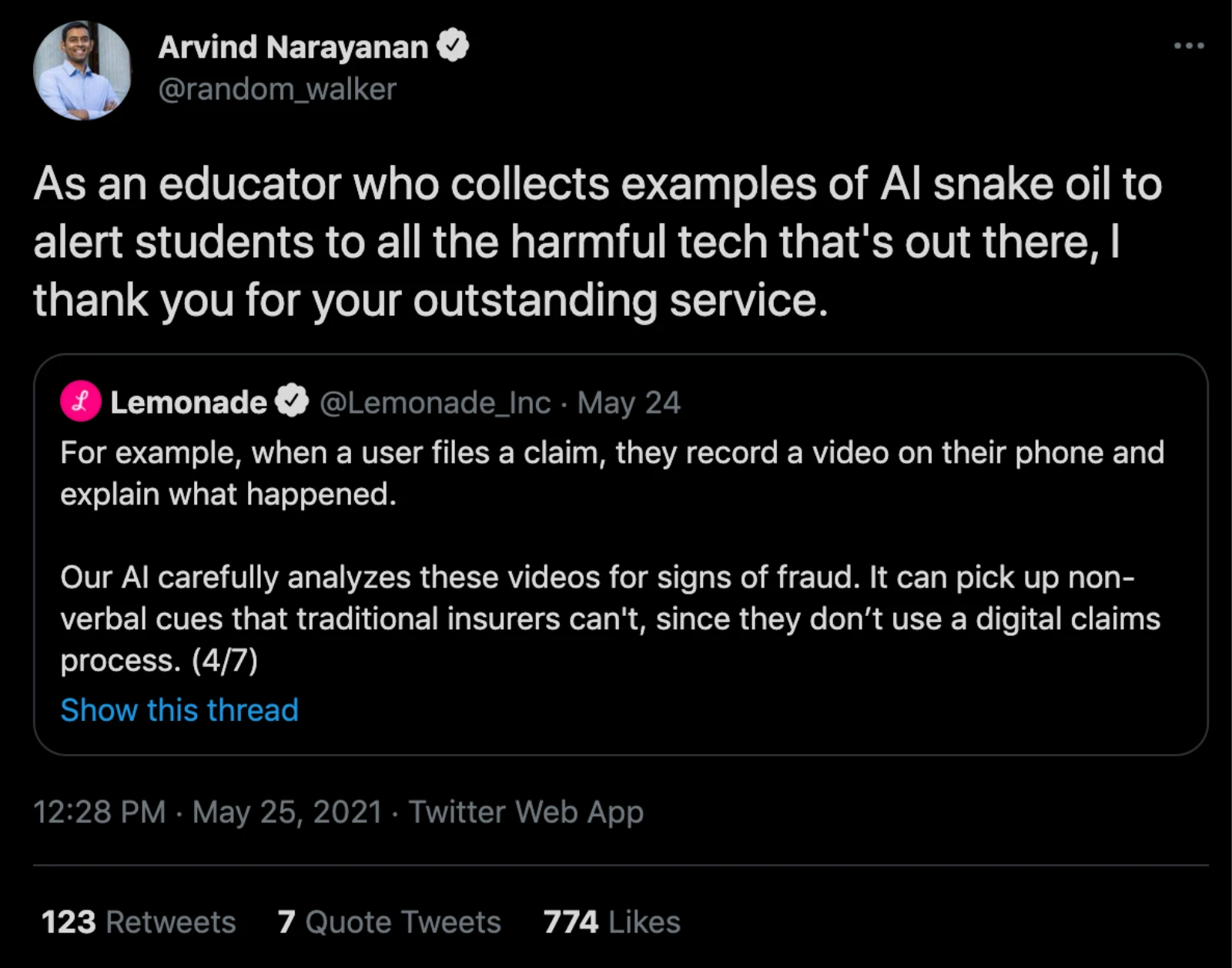

But Twitter was having none of it. AI experts argued that “emotion recognition” is “snake oil” pseudoscience that cannot be relied upon for fraud detection. One expert thanked Lemonade for giving him great AI snake oil content that he could show his students.

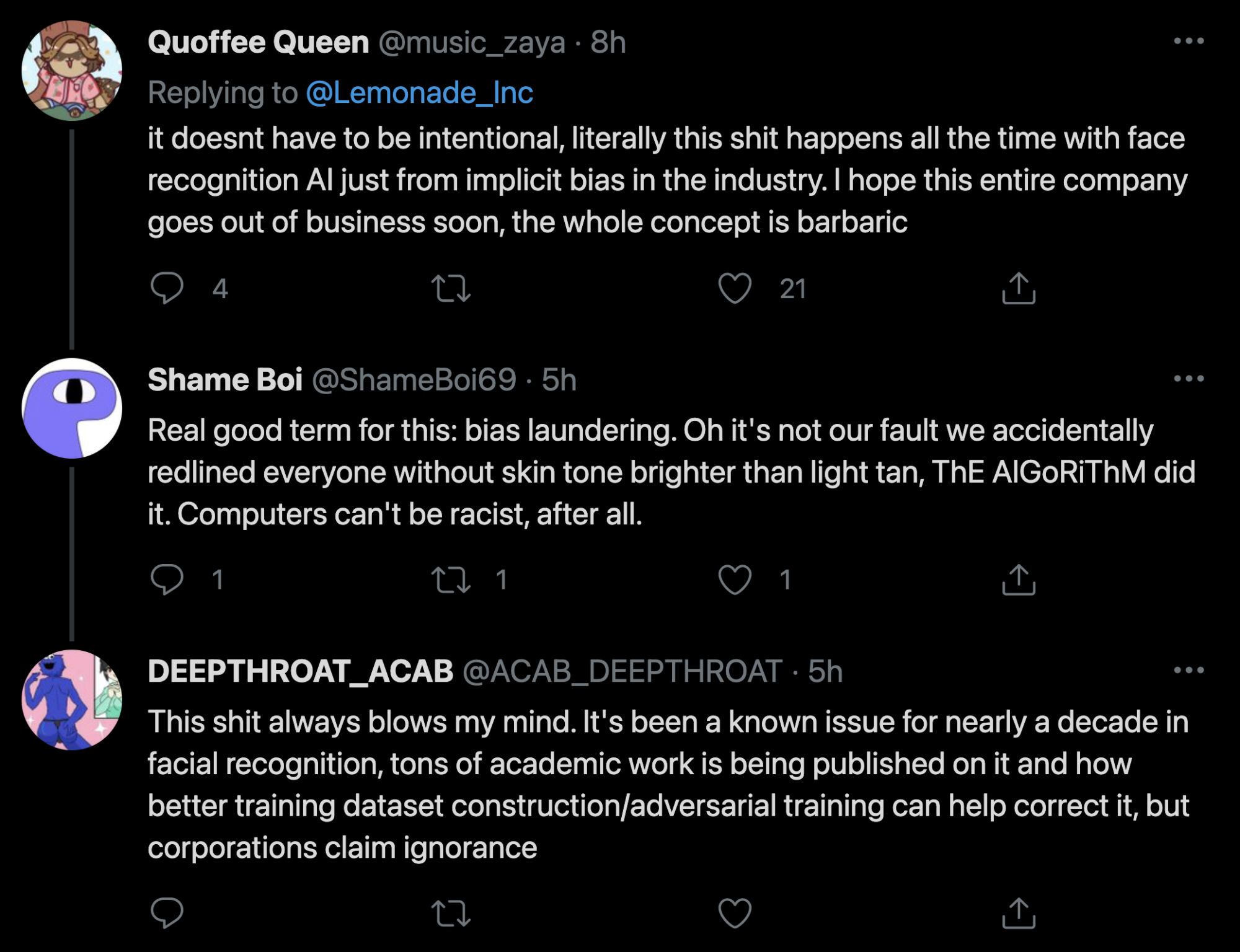

Many also claimed that these solutions are biased and can disproportionately impact certain ethnicities. Some of those comments can be seen here.

They Deleted The Tweet And Posted A Blog Explaining What They Do

By Wednesday of the same week, Lemonade had deleted the “awful” tweet and attempted to explain itself further in a blog post. Flagged claims are not automatically denied, the company said; they are sent to a human fraud investigator for further scrutiny.

“The term non-verbal cues was a bad choice of words to describe the facial recognition technology we’re using to flag claims submitted by the same person under different identities. These flagged claims then get reviewed by our human investigators, AI is non-deterministic and has been shown to have biases across different communities. That’s why we never let AI perform deterministic actions such as rejecting claims or canceling policies.”

Lemonade’s Explanations, However, Raise Concerns

As Motherboard explains in this article, “Lemonade has still left widespread confusion about how the technology at the foundation of its business works. The post says the company uses facial recognition technology, for example, but in its privacy policy it claims that it will never collect customers’ biometric information. And how it achieves 1,600 data points from a video of a person answering 13 questions without biometric information also isn’t clear.”

The in-depth collection of video data, and its analysis with AI tools, make it clear that Lemonade must be storing at least some biometric data in order to train models to detect patterns of fraud.

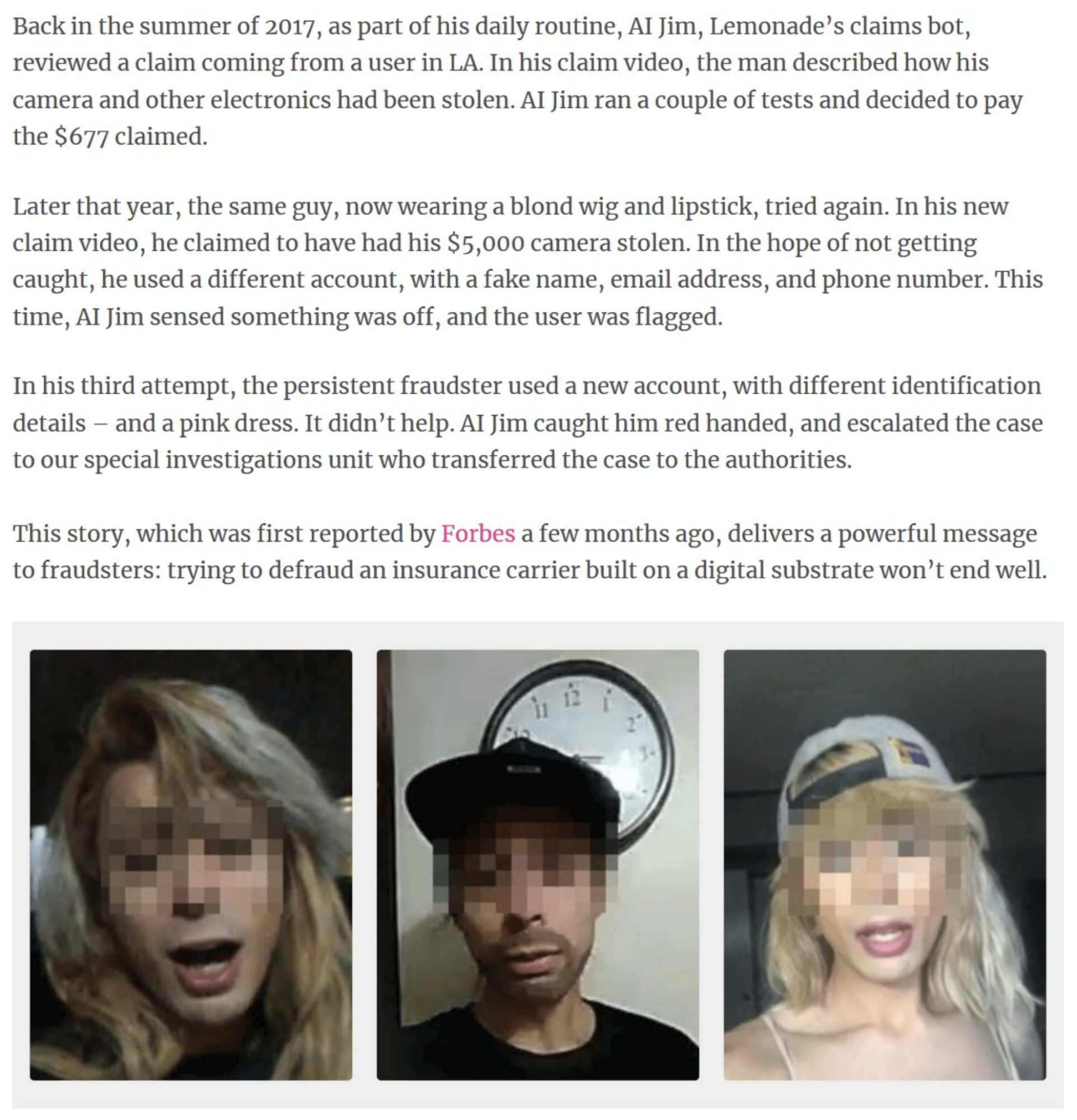

In fact, Lemonade posted an article about rooting out fraud in its claim videos that seemed to suggest it has been doing this for a while.

It would be difficult to detect this fraud without collecting biometric information from previously submitted insurance claims.

Racial Bias in Artificial Intelligence, Particularly for Fraud Detection, Has Become a Hot Topic

Facial recognition and artificial intelligence are under fire after recent studies suggested that they are highly prone to racial bias. In October of 2020, Harvard University published this study, which found that facial recognition algorithms performed the worst on darker-skinned females, with error rates up to 34% higher than for lighter-skinned males.

Amazon has also recently recognized the problem and placed an indefinite global ban on the use of its facial recognition technology, Rekognition, by police and others. Some claim that the software has led to false arrests.

There is plenty of room for AI to grow in this field, and I believe that the technology will catch up. But for the moment Lemonade has found itself in hot water and may need to tread lightly.