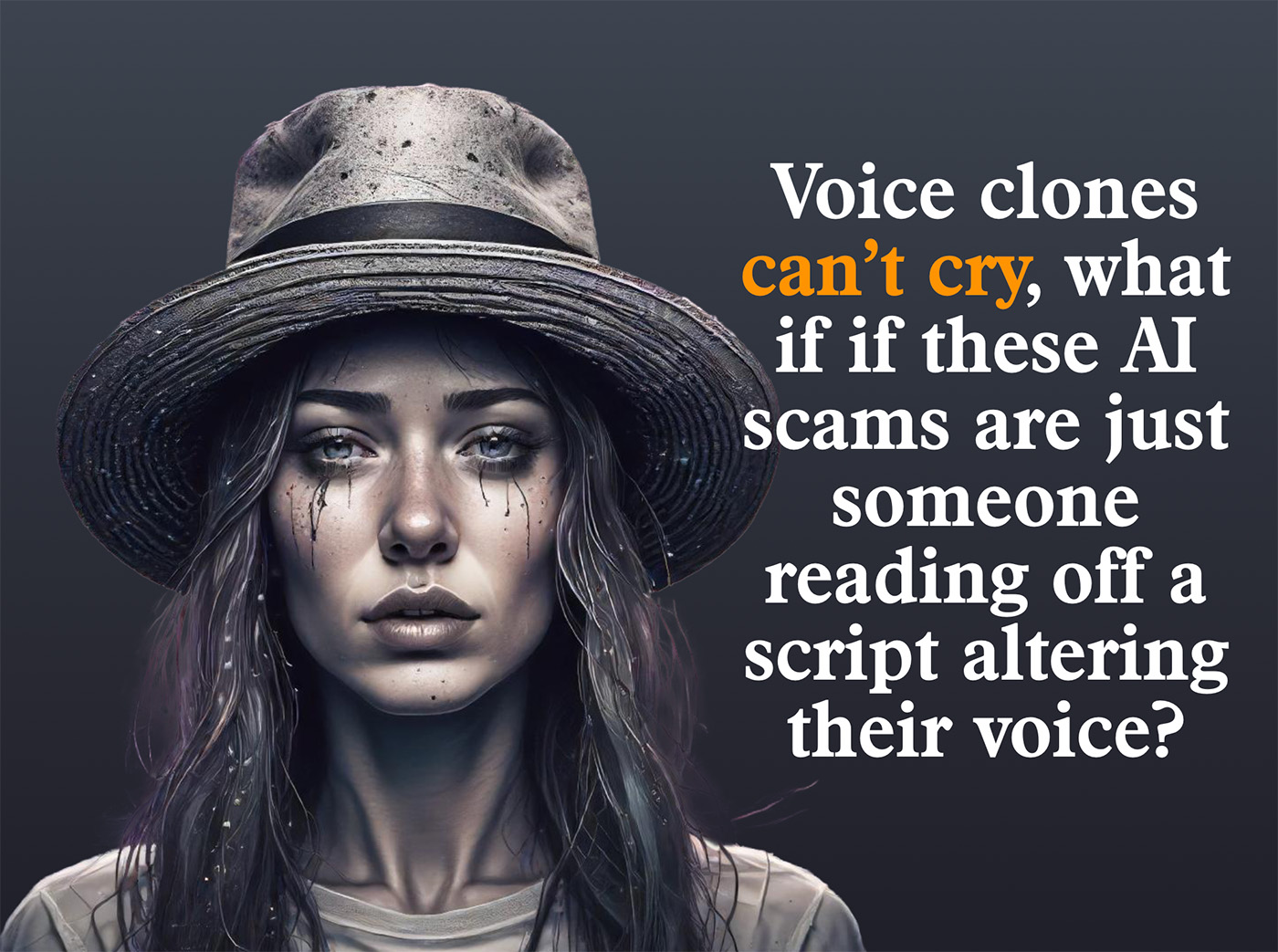

Something strange occurred to me the other day. I have read many articles about voice clone kidnapping scams. In each case, the victim (often a parent) claims to have received a deepfake call from their child, who was crying.

There is only one problem with that. I have never found a voice cloning service that can make a deepfake cry. The voices cannot express any emotion. In fact, for the most part, they are very monotone.

What if these AI voice clone scams are not AI but someone altering their voice and reading off a script?

It’s hard to know since we’ve never heard from an actual perpetrator who conducted one of these voice scams.

But even as I pondered this question, I learned that two significant AI advancements emerged last week. And both made me realize that voice clones may not be able to cry yet, but they soon will be.

This will be a critical capability that scammers, fraudsters, and extortionists will rely upon to manipulate their victims.

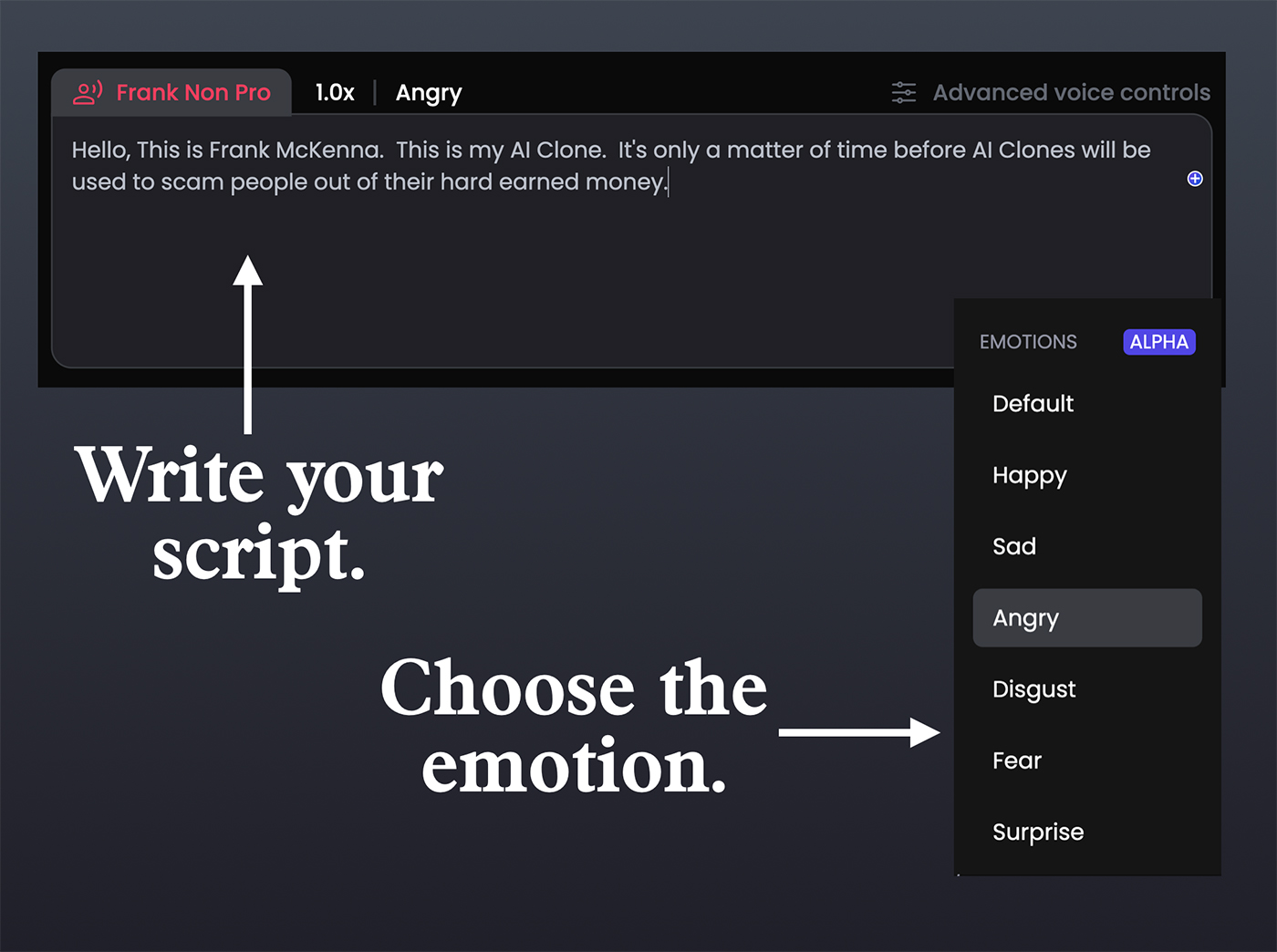

Introducing PlayHT – AI Voices With Emotion

ElevenLabs, stand aside: an emerging AI voice service called PlayHT is taking voice cloning to the next level. Their AI voices are hyper-realistic, and their ability to generate emotional speech, including laughter, sadness, fear, and happiness, is very good.

Their human emotion voice cloning is still in beta. It lets the user add emotion to anything typed into the generator.

The emotions the AI can emulate include Happy, Sad, Anger, Disgust, Fear, and Surprise.

I Created A Voice Clone And Tried It With Human Emotions

I tried PlayHT’s instant voice clone tool. It took 30 seconds of my voice from a clip I recorded and generated my AI clone in less than a minute. I then ran my voice through many of the different emotions.

Listen here. The results are not perfect, but they are believable in most cases and far better than the standard monotone AI voice clones I have made in the past.

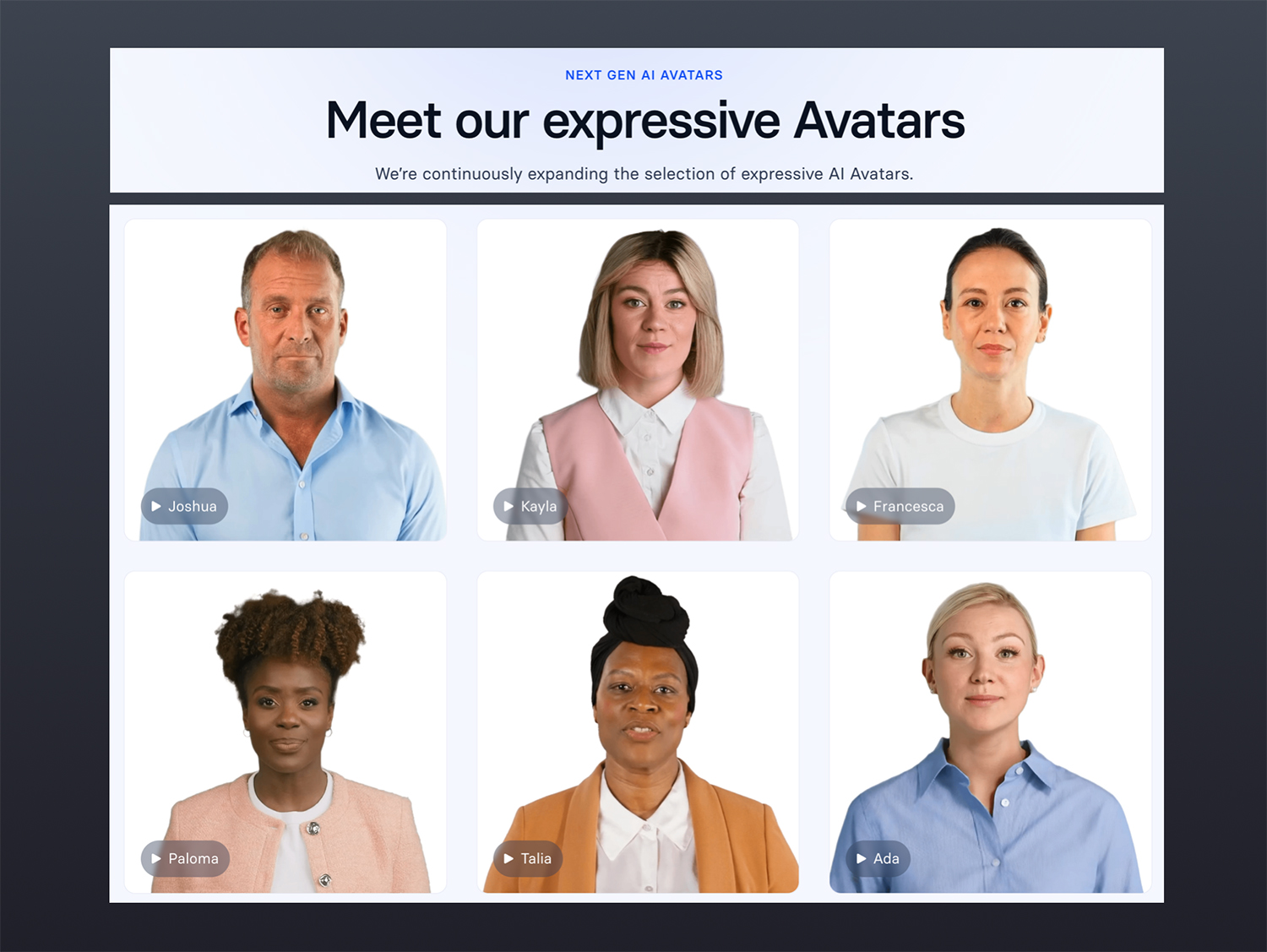

Synthesia Has Also Launched AI With Emotions

Synthesia.io was one of the first companies to launch AI-based Avatars that could read scripts. While the earliest versions were impressive, they came across as flat and robotic. But that has changed with their latest AI, which includes a range of emotions.

These new expressive Avatars produce more realistic clones, and deepfakes will appear that much more believable when they laugh, smile, or even appear angry.

I created this Avatar using Francesca and wrote a script that would include a happy and serious tone.

For $1,000, you can create your own studio Avatar, a deepfake clone of yourself, using the same range of emotions in your videos.

So, Where is This All Going?

Deepfakes are progressing at an unprecedented rate. The ability of deepfakes to express emotions with voice and video will give romance scammers and business email compromise (BEC) scammers that much more ability to con their victims and pressure them into giving up their money.

Imagine getting a video call from a very angry CEO demanding you send a wire transfer. If you don’t comply, you will be fired immediately!

Or imagine getting a call from your love interest, crying because they are in the hospital and need $10,000 to pay for surgery. That might pull on your heartstrings.

The era of emotional deepfakes has begun.