AI is coming for you. It’s going to take your job. It’s going to steal your money. It’s going to give fraudsters a magic wand to do all their evil deeds.

Is this all hype, though?

For the present moment, yes.

Could it happen in the future? Possibly, but not likely.

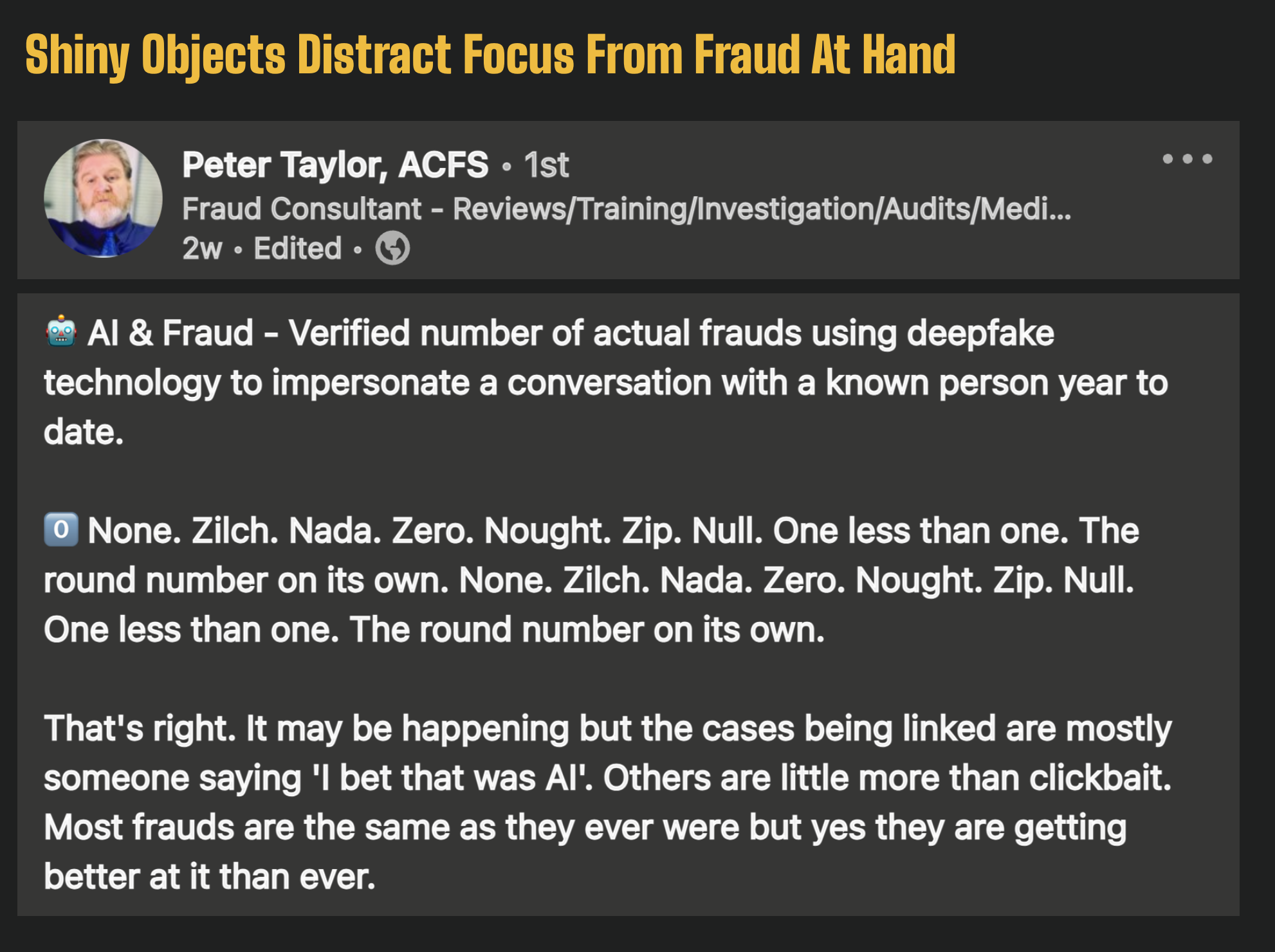

Well-respected and tenured fraud and cyber experts think the hype of AI in fraud is overblown. And worse, it might be distracting companies with shiny objects, preventing them from investing in and focusing on the fraud happening right now.

One of those experts is Peter Taylor, an industry expert and fraud consultant.

DeepFake AI Reported, But Is It Really?

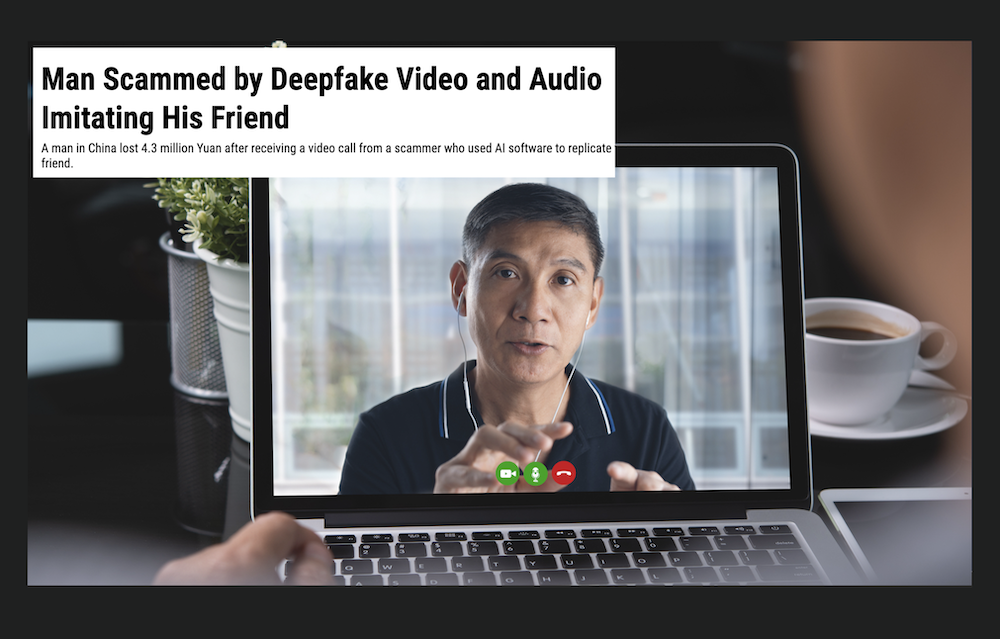

When I sat down to think about Peter’s question, I realized that the only successful case of deepfaking an actual conversation came out of China.

Police said a fraudster used AI-powered face-swapping technology to scam a man out of $600,000.

On April 20, a man surnamed Guo, a legal representative of a technology company in Fuzhou, Fujian province, received a video call via WeChat from a friend asking him for help.

Guo’s “friend” said that he was bidding on a project in another city and wanted to use Guo’s company’s account to submit a bid of 4.3 million yuan. He promised to pay Guo back immediately.

The ruse was enough to convince Guo, and he sent two wire transfers to the scammer.

But what if this guy in China wasn’t really deepfaked by AI? What if it was something far more basic and far less technical?

What if it was nothing more than a basic FaceSwap and not DeepFake AI at all?

Are Talking Photos And Screen Sharing What Is Really Happening In DeepFake AI?

There are lots of talking photo apps freely available to anyone with an internet connection. And they often provide just enough capability for a scammer to fool a victim.

Scammers can upload a photo, and the application will animate it and create a video that can be downloaded.

The scammer then shares their screen with the victim and talks as the video moves.

It sounds basic, but in many cases it does work.

This isn’t sophisticated DeepFake AI trickery at all.

Catfished Shows How The DeepFakes Actually Work With A Victim

The YouTube channel Catfished walks through, with one victim, how he was duped by a very common “animated photo” scam.

The victim thought it all looked very strange, but it wasn’t deepfake AI at all.

Too Many People Beating The AI Drum

Fraud experts like Brett Johnson think that solution vendors and some experts might be over-hyping the AI capability of scammers.

To him, fraudsters are going to use whatever works, and DeepFake AI lacks one important component, which is why it doesn’t work for them: it’s not real time. Deepfakes are pre-recorded, and that is the problem.

“The problem is way too many people are beating the AI Drum,” he said to me. “You don’t need AI when you have a very effective product like a talking photo.”

Be Aware of AI, But Don’t Lose Focus Of What The Fraud At Hand Is

To me, though, it’s not a matter of “if” fraudsters will adopt AI, it’s a matter of “when”. I think in the vast majority of fraud (99.9% or more) there is no clever AI behind it. It’s just the same thing it has always been.

Fraud fighters need to be aware of the threat of AI, but companies shouldn’t drop all their investment dollars into trying to stop something that rarely happens.

Instead, focus on the big stuff that is killing you today: check fraud, first-party fraud, mules and scams. That is where the investment is needed.

Be aware of AI, but don’t lose focus of what the fraud at hand is.