The cat is out of the bag. AI has been unleashed into the world.

Everyone loves what it can do for them, and for their businesses.

But the fraudsters and scammers love it too, leveraging the tech in a new genre of fraud called “AI-enabled fraud”.

And it’s actually happening right now.

A few months ago, a guy named Guo in China fell for a real-time deepfake video and wire transferred over $700,000!

Now this might not be happening a lot yet, but it is bubbling up and it has fraud fighters concerned.

What are we going to do about this?

It turns out there is hope in the battle against AI-enabled fraud. And that could be AI itself.

You Be The Judge – Can You Spot The Clone?

AI voice and video cloning have gotten so good in the last 6 months that I don’t think most people can tell the real from the fake anymore.

Last month, I created an audio clone and it was so darn good, I did a test.

I released the clone and a real recording and asked people to tell me which was real and which was fake. You can test them for yourself here.

First Video – Is This Really Me, Or A Clone?

Second Video – Is This Really Me, Or A Clone?

My Friends and Even My Family Could Not Tell The Difference

About 60 people responded to my survey and took the test. 80% of them incorrectly identified the clone as my true voice.

Even my friends and family could not tell!

Then I Turned The Recordings Over To See If Artificial Intelligence Could Tell

Fraud fighter Jake Emry sat stumped at his desk. He listened to the recordings over and over again trying to figure out which was real and which was fake. They both sounded real, but then again maybe they both sounded off.

He honestly could not tell.

And then he called me with this idea ➡️ What if we turned the recordings over to AI to see if it could tell?

Now that is an amazing idea! So we did just that.

I Turned to The Scientists at NICE Actimize

It turns out the scientists at NICE Actimize have been working on the problem of voice cloning for a while, and they have developed a deep neural network AI that has superhuman capability.

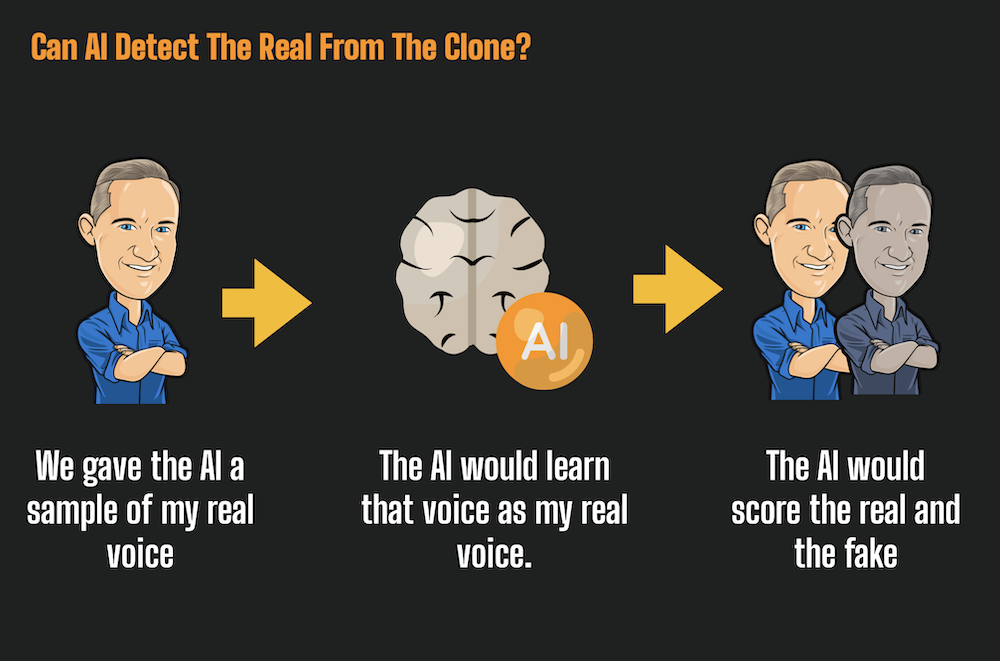

So they recommended that we test my clone scientifically with this process:

- We would give the AI a sample of my real voice (a short recording).

- The AI would use that sample as its reference for what my real voice sounds like.

- We would give the AI the real and fake voice and see if it could tell.

So I recorded a sample of my voice for the data scientist to use.
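To make the idea concrete, here is a minimal sketch of that "reference sample, then compare" process. It is only an illustration and not NICE Actimize's actual method: I am assuming each recording has already been turned into a list of numbers (a hypothetical "voiceprint" vector), and the function names, recording names, and values are all made up.

```python
import math

def cosine_similarity(a, b):
    """Similarity between two voiceprint vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("voiceprint vectors must be non-zero")
    return dot / (norm_a * norm_b)

def rank_against_reference(reference, candidates):
    """Score every candidate against the reference, best match first."""
    scores = {
        name: cosine_similarity(reference, vector)
        for name, vector in candidates.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical voiceprints; a real system would extract these from audio.
reference = [0.9, 0.1, 0.4]               # the enrolled sample of the real voice
candidates = {
    "recording_a": [0.88, 0.12, 0.41],    # made-up values, close to the reference
    "recording_b": [0.2, 0.9, 0.3],       # made-up values, far from the reference
}

ranking = rank_against_reference(reference, candidates)
most_suspicious = ranking[-1][0]          # lowest similarity to the real voice
print(ranking)
print("Most likely the clone:", most_suspicious)
```

In this toy setup, the recording that sits furthest from the reference is the one flagged as the likely clone.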

AI Nailed It, It Wasn’t Even Close

The next day, Jake called me and said, “It wasn’t even close. One of those recordings is off-the-charts fake.”

But did it pick the right voice as fake?

It turns out it did. Check out the AI results here.

I was surprised at how well the AI separated the real from the fake when, to the human ear, they were so close.

Why Did It Work? It Turns Out, It’s Science!

So why was AI better than humans at spotting my voice clone? It turns out there is a lot of science behind it.

First, NICE Actimize uses ‘1,000’s of parameters’ of speech, and their risk engine turns the frequencies of that speech into ratios.

Those parameters include things like dialect, pronunciation, amplitude, manner of speech, and speed of speech.

Then, specific algorithms use DNNs (deep neural networks) to identify the synthetic nature of the speech. Think of this like voice printing, which can pick up nuances such as the gender of the speaker.
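For the curious, here is a rough sketch of what "turning frequencies into ratios" and then scoring them could look like. This is my own simplified illustration, not the NICE Actimize engine: the band energies, the weights, and the function names are all hypothetical, and a single logistic function stands in for a trained deep neural network.

```python
import math

def band_ratios(band_energies):
    """Turn raw energy per frequency band into ratios of the total energy."""
    total = sum(band_energies)
    if total <= 0:
        raise ValueError("total energy must be positive")
    return [energy / total for energy in band_energies]

def synthetic_score(ratios, weights, bias):
    """Squash a weighted sum of ratios into a 0..1 'how synthetic' score.

    A real detector would use a trained deep neural network here; this
    one-layer logistic function is only a stand-in for illustration.
    """
    z = bias + sum(w * r for w, r in zip(weights, ratios))
    return 1.0 / (1.0 + math.exp(-z))

# Made-up energies for four frequency bands of one recording.
energies = [4.0, 3.0, 2.0, 1.0]
ratios = band_ratios(energies)

# Made-up weights; in practice these would be learned from labeled audio.
weights = [-2.0, -1.0, 1.0, 6.0]
score = synthetic_score(ratios, weights, bias=0.0)
print(ratios, score)
```

Ratios are handy because they describe the shape of the voice rather than how loud the recording is: doubling the volume doubles every band's energy but leaves the ratios unchanged.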

Maybe I didn’t do a great job explaining it but you can check out this video here that explains it very well.

Voice Biometrics Work And Should Be Considered Part Of A Good Defensive Fraud Strategy

With the explosion of voice cloning, I think Voice Biometrics might take more of a front seat in terms of investments in fraud prevention.

I asked Yuval Marco, GM at NICE Actimize, to weigh in on the matter. He thinks Generative AI and deepfake tech are going to impact scams.

This is what he says about it.

“Advancements in generative AI, in combination with readily accessible deep fake technologies, will amplify the current risk of scams to new levels. As a result, organizations will need to adopt an integrated and multi-layered approach which includes advanced authentication controls that can identify AI-generated artifacts, while adopting behavioral analytics as another layer of defense to identify anomalous and risky behavior.”

Don’t Be The Horse and Buggy Driver Yelling At Cars

In 1910, horse and buggy operators raised a stink about cars and how they would never work.

They tried their best to keep things just the way they were because they thought cars weren’t the future, horses were.

We’re at the same point with AI. Don’t be the horse and buggy driver yelling at cars now!

Thanks for reading. This was a fun test to try out and I just want to give a shout out to all those data scientists out there working on tech to help us combat scams!