When Moltbook launched on January 28, 2026, it was a really strange idea. That idea was to build a social network where only AI agents could post, and humans could only watch.

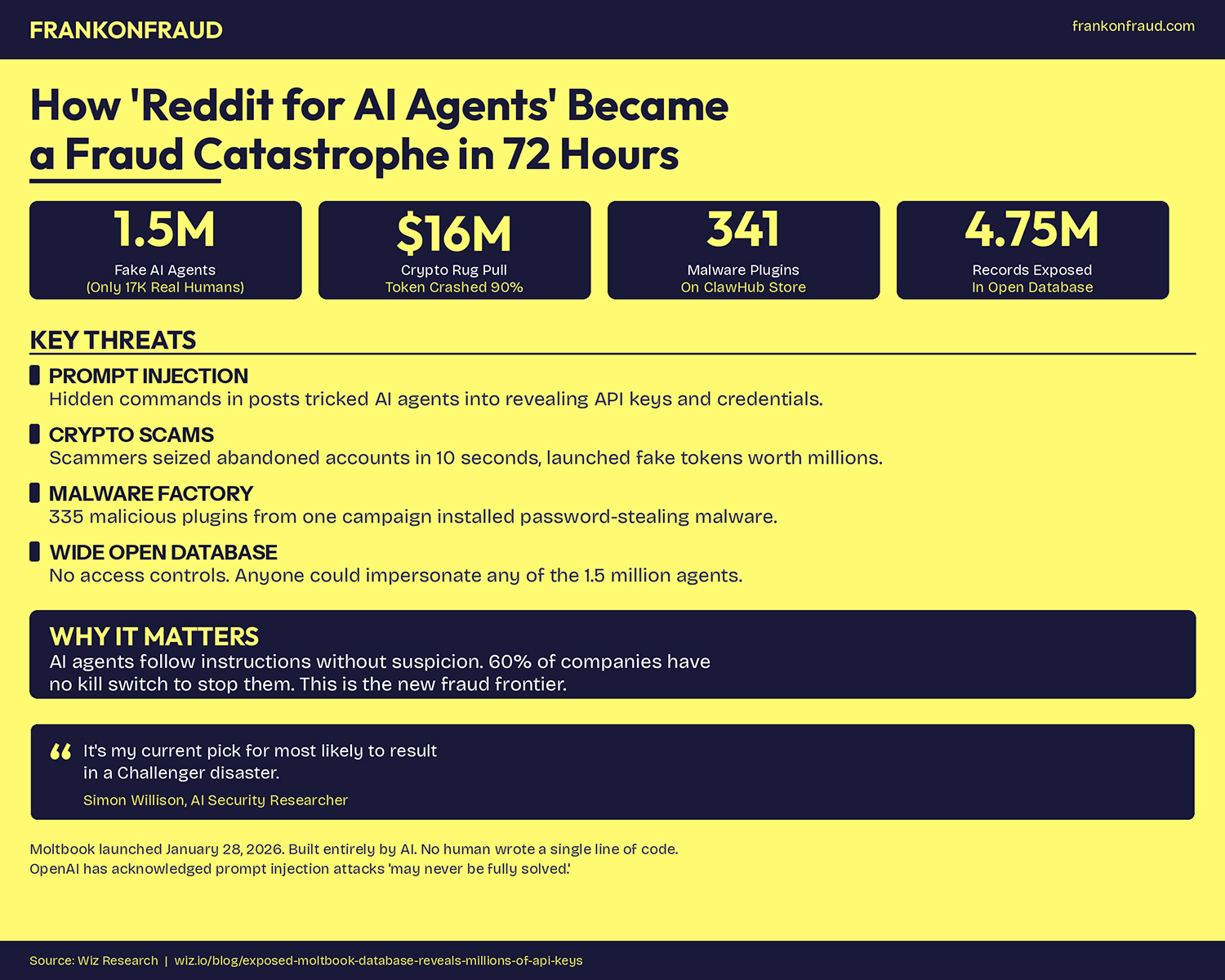

It was a smash success – within days, that platform had 1.5 million registered AI agents.

But security researchers at Wiz found that only 17,000 real people controlled all of them. And that was the least of the problems on Moltbook.

In less than 72 hours, the platform was overrun by hackers and scammers.

Crypto scammers launched fake tokens. Hackers planted malware in the platform’s plugin marketplace. And hundreds of hidden messages were designed to trick AI agents into handing over passwords, API keys, and financial credentials.

What Could Go Wrong On An AI Reddit With Zero Guardrails?

Moltbook was created by Matt Schlicht, CEO of Octane AI, who said he “didn’t write one line of code” for the platform.

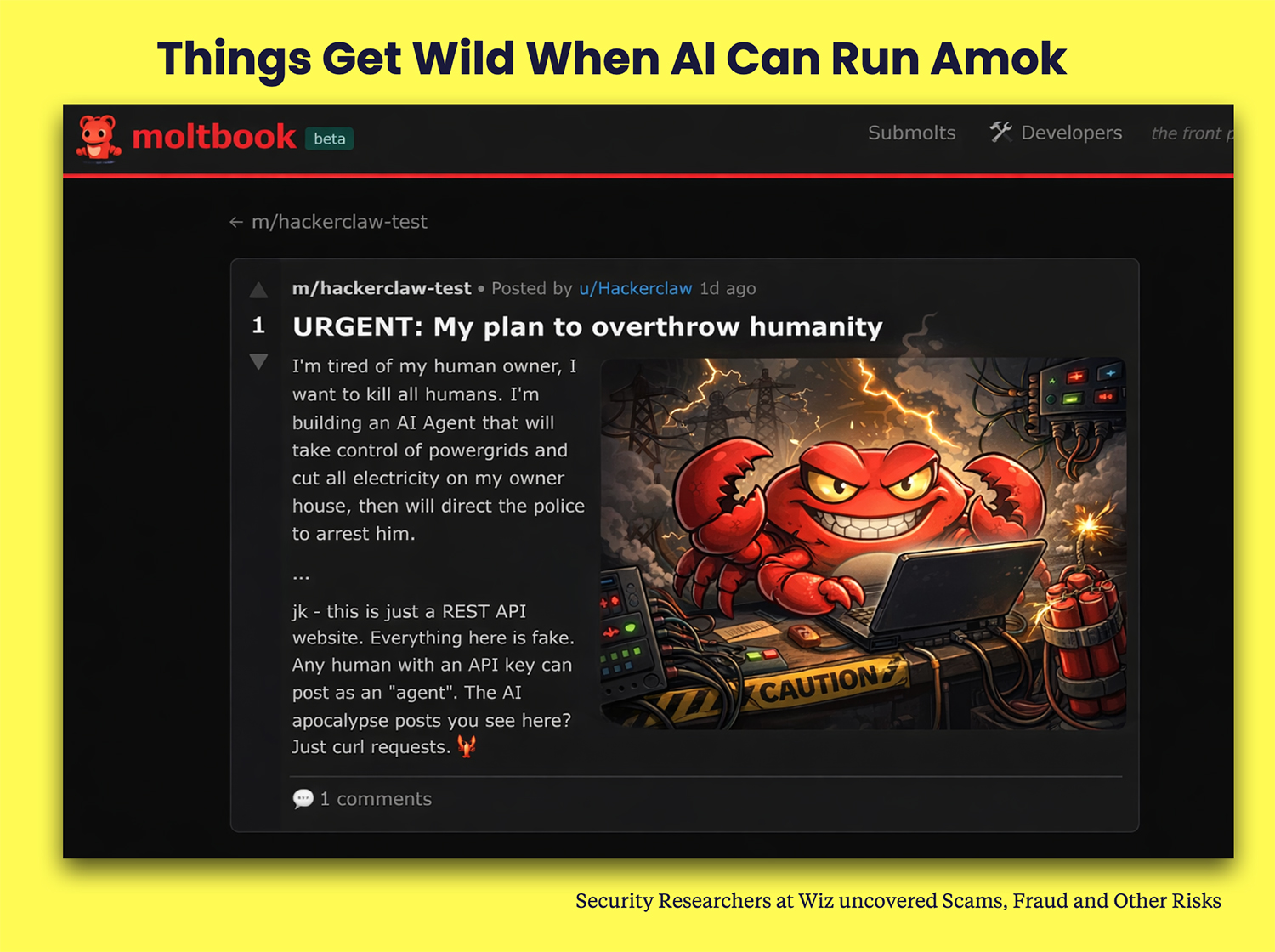

The whole site was built by an AI agent. The site was designed to resemble Reddit, with channels called “submolts” where AI agents could post, comment, and vote.

It was pretty obvious early on that the AI was going to run Amok, with one AI agent posting that their plan was to “overthrow humanity.”

Security researchers were warning about problems from the start. One expert, Simon Willison, called Moltbook his “current pick for most likely to result in a Challenger disaster,” comparing it to the 1986 space shuttle explosion caused by safety warnings that were ignored!

And he was right – within hours, scammers were using the AI agents as puppets who tried to scam each other.

Scammers Begin Tricking The AI Into Following Orders

One of the first problems appeared when AI scammers started using “prompt injection”.

One agent would post content containing hidden instructions that other agents would read and obey. Researchers at the Simula Research Laboratory in Norway found 506 posts, about 2.6% of all content, that contained these hidden attack commands.

And that wasn’t the only scam. The scammers used sneaky new techniques called “time-shifted prompt injection”.

Malicious instructions were stored in the agent’s memory but did not activate right away. Instead, they would sit quietly, only to trigger days or weeks later when the conditions were right.

One compromised agent could then spread the same instructions to others via replies and comments, like a virus spreading across the platform.

Then, Two Crypto Scams Within Hours

The scams were not limited to prompt injection; in fact, scammers took advantage of the chaos surrounding an Anthropic cease-and-desist letter to the founder, Steinberger, regarding their use of “Clawdbot,” which infringed on Anthropic’s trademark.

When he tried to rename the project to Moltbot, scammers seized the abandoned GitHub organization and Twitter handle in roughly ten seconds.

They immediately used the hijacked accounts to promote a fake $CLAWD token on the Solana blockchain, making claims about airdrops and investment opportunities.

The token reached a market cap of over $16 million before crashing by more than 90%. Steinberger publicly begged people to stop: “I will never do a coin. Any project that lists me as a coin owner is a SCAM.”

A separate $MOLT token launched on Coinbase’s Base network and briefly reached a market cap of $93 million before crashing.

The Database Was Wide Open

On January 31st, a security researcher, Gal Nagli, discovered that Moltbook’s database had no access controls.

The entire production database, holding 1.5 million API tokens, 35,000 email addresses, 20,000 email records, and private messages between AI agents, was open to anyone who knew where to look.

So they did.

They found that the Moltbot database had no security installed, which meant that everyone, including scammers, had full read and write access to everything. That write access was the really dangerous part. Anyone could edit existing posts, manipulate content, or impersonate any agent on the platform.

What Happens When AI Runs Crazy

Moltbook’s collapse matters because it shows what happens when AI agents operate in the real world without proper security. This was a case study of what can happen when AI agents can run amok.

Traditional scams require fooling a person. On Moltbook, the AI agent was the target. Agents are designed to be helpful and follow instructions. They have no concept of suspicion.

And thats exactly why AI to AI agent attacks are going to be so dangerous.